Artificial intelligence (AI) has progressed from basic rule-based systems to deep learning systems, and now to agentic AI that acts autonomously in dynamic contexts. To understand this evolution, it helps to consider the rational agent: an entity that perceives its environment, reasons about its perceptions, and deliberately acts to solve a problem and achieve its goals. Understanding what a rational agent is, how it works, and why it matters helps explain how today’s AI systems make decisions, plan, and act in uncertain or complex situations.

What Is a Rational Agent in AI?

A rational agent in the field of artificial intelligence is an autonomous entity that detects its environment, makes inferences based on its observations, and performs actions to enhance the probability of achieving a particular goal.

Formally, a rational agent is defined as:

An AI system that selects actions expected to maximize its performance measure, given its knowledge and the environment.

In simpler terms, a rational agent does not just act; it acts for a reason. Each action results from evaluating the alternatives and selecting the one with the best expected outcome.
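One common way to write this definition down is as expected-utility maximization. The notation here is an assumption added for illustration and does not come from the article: $a$ is a candidate action, $e$ is the evidence the agent has gathered so far, $s'$ is a possible resulting state, and $U$ is a utility function standing in for the performance measure.

$$
\mathrm{EU}(a \mid e) = \sum_{s'} P(s' \mid a, e)\, U(s'), \qquad a^{*} = \arg\max_{a} \mathrm{EU}(a \mid e)
$$

Read this way, "rational" means choosing $a^{*}$, the action whose probability-weighted outcomes score highest, given only what the agent currently knows.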

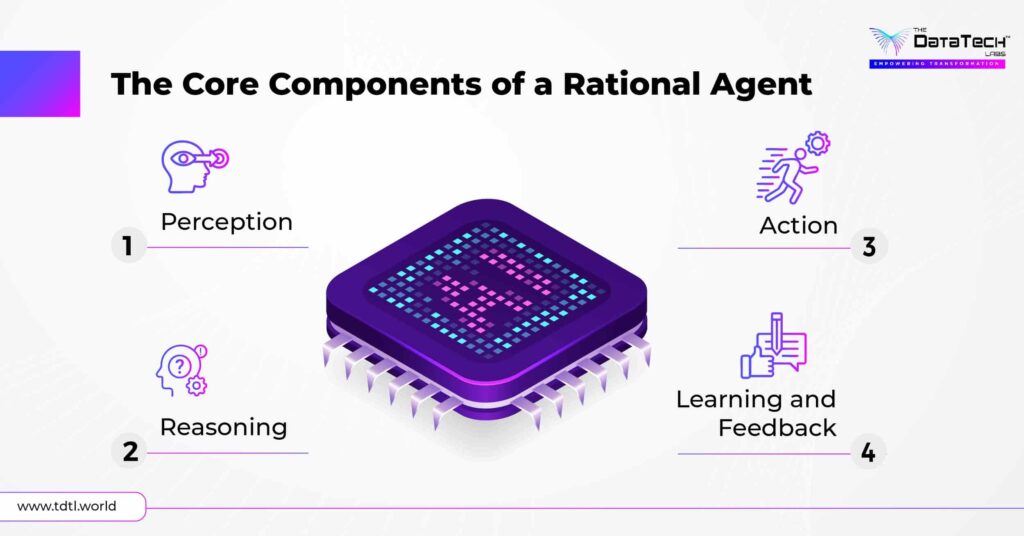

The Core Components of a Rational Agent

To understand how rational agents function, it’s helpful to break them down into four basic elements:

- Perception: The agent receives information from its environment via sensors or digital inputs such as APIs and data streams.

- Reasoning: The agent uses algorithms, models, or heuristics to interpret the gathered information and work out what is going on.

- Action: The agent acts on that reasoning, executing tasks or changing its environment via actuators or digital outputs.

- Learning and Feedback: The agent assesses the effects of its action and adjusts its approach for next time.

This perception → reasoning → action → feedback loop is what allows rational agents to be more than merely reactive or static.
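The loop can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reference implementation: `LoopAgent`, `toy_environment`, `run`, the two percepts, the two actions, and the reward values are all hypothetical names and numbers chosen for the example.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class LoopAgent:
    """Hypothetical agent that learns which action works best for each percept."""

    actions: list[str]
    learning_rate: float = 0.5
    # Estimated value of each (percept, action) pair; unseen pairs count as 0.0.
    values: dict[tuple[str, str], float] = field(default_factory=dict)

    def reason(self, percept: str) -> str:
        """Reasoning: pick the action with the highest estimated value."""
        return max(self.actions, key=lambda a: self.values.get((percept, a), 0.0))

    def learn(self, percept: str, action: str, reward: float) -> None:
        """Feedback: move the stored estimate toward the observed reward."""
        old = self.values.get((percept, action), 0.0)
        self.values[(percept, action)] = old + self.learning_rate * (reward - old)


def toy_environment(percept: str, action: str) -> float:
    """Made-up reward rule: heating helps when cold, idling helps when warm."""
    good_action = {"cold": "heat", "warm": "idle"}
    return 1.0 if good_action[percept] == action else -1.0


def run(agent: LoopAgent, percepts: list[str]) -> list[tuple[str, str, float]]:
    """Run one perceive -> reason -> act -> feedback cycle per percept."""
    history = []
    for percept in percepts:                        # perception
        action = agent.reason(percept)              # reasoning
        reward = toy_environment(percept, action)   # action and its effect
        agent.learn(percept, action, reward)        # learning and feedback
        history.append((percept, action, reward))
    return history
```

Calling `run(LoopAgent(actions=["idle", "heat"]), ["cold", "cold", "warm", "cold"])` produces the actions idle, heat, idle, heat: the first "cold" percept is handled badly, the negative reward lowers that estimate, and the agent switches to heating the next time it is cold.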

How Rational Agents Differ From Traditional AI Systems

Before rational agents, AI systems often operated on fixed rules or static models. They followed instructions but couldn’t adapt when conditions changed.

Rational agents, by contrast, are designed to adapt. The table below summarizes the differences:

| Traditional AI | Rational Agent AI |
| --- | --- |
| Executes predefined rules | Evaluates options dynamically |
| Lacks awareness of environment | Continuously perceives environment |
| Requires constant human input | Acts autonomously to achieve goals |
| Limited to static data | Learns and improves from outcomes |

This difference is what makes rational agents the foundation of agentic AI, where systems can make multi-step decisions independently.

Types of Rational Agents in AI

Rational agents can be categorized based on their complexity and the intelligence embedded within them.

- Simple Reflex Agents
  - React to current conditions using “if–then” rules.
  - Example: A thermostat turning off heating when a temperature threshold is met.

- Model-Based Reflex Agents
  - Maintain an internal model of the world to make better decisions.
  - Example: A self-driving car that updates its understanding of road conditions in real time.

- Goal-Based Agents
  - Make decisions that bring them closer to achieving a defined goal.
  - Example: An AI assistant planning an optimal travel itinerary.

- Utility-Based Agents
  - Evaluate multiple options and select the one that maximizes expected utility or satisfaction.
  - Example: A trading bot that chooses the best investment strategy to balance profit and risk.

- Learning Agents
  - Continuously improve performance by learning from experiences and outcomes.
  - Example: A recommendation engine that refines suggestions based on user behavior over time.
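To make the distinctions concrete, here is a rough Python sketch of three of these types. Everything in it is an illustrative assumption: the function and class names, the 20 °C threshold, the "heat_off" default, and the "expected return minus weighted risk" scoring rule are made up for the example and are not taken from any particular system.

```python
from __future__ import annotations

from typing import Optional


def simple_reflex_thermostat(temp_c: float, threshold_c: float = 20.0) -> str:
    """Simple reflex: the decision depends only on the current percept."""
    return "heat_on" if temp_c < threshold_c else "heat_off"


class ModelBasedThermostat:
    """Model-based reflex: remembers the last reading to cope with sensor gaps."""

    def __init__(self, threshold_c: float = 20.0) -> None:
        self.threshold_c = threshold_c
        self.last_known_temp: Optional[float] = None  # minimal internal world model

    def act(self, temp_c: Optional[float]) -> str:
        if temp_c is not None:
            self.last_known_temp = temp_c  # update the model from the new percept
        if self.last_known_temp is None:
            return "heat_off"  # nothing known yet; assumed default for this sketch
        return simple_reflex_thermostat(self.last_known_temp, self.threshold_c)


def utility_based_choice(
    options: dict[str, tuple[float, float]], risk_weight: float
) -> str:
    """Utility-based: score each option as expected return minus weighted risk.

    `options` maps a name to an (expected_return, risk) pair.
    """
    return max(
        options,
        key=lambda name: options[name][0] - risk_weight * options[name][1],
    )
```

The reflex function has no memory, so a missing reading leaves it with nothing to act on; the model-based version falls back on its stored state. The utility-based function changes its answer as `risk_weight` changes, which is the "balance profit and risk" behavior described for the trading bot.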

Why Rational Agents Matter in Modern AI

The rational agent model underpins the design of autonomous, intelligent, and adaptive systems. Here’s why it’s strategically important:

- Decision-Making Under Uncertainty: Rational agents can operate effectively even when data is incomplete or noisy, using probabilistic reasoning or predictive models.

- Scalable Autonomy: When multiple rational agents collaborate (as in multi-agent systems), they can manage large-scale operations, from logistics to cybersecurity, with minimal human oversight.

- Dynamic Optimization: Rational agents optimize continuously, adapting strategies in real time to maximize outcomes, whether financial, operational, or strategic.

- Foundation of Agentic AI: Agentic AI builds on the principles of rational agency, such as perception, autonomy, and feedback, extending them into multi-step, goal-driven environments.
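As a small illustration of decision-making under uncertainty, the sketch below combines a Bayesian belief update from a noisy sensor with an expected-utility choice. The scenario (an icy-road detector), the probabilities, the utility numbers, and the names `posterior`, `best_action`, and `UTILITIES` are all assumptions invented for this example.

```python
from __future__ import annotations


def posterior(prior: float, p_obs_if_true: float, p_obs_if_false: float) -> float:
    """Bayes' rule for a binary hypothesis after one positive observation."""
    evidence = p_obs_if_true * prior + p_obs_if_false * (1.0 - prior)
    return p_obs_if_true * prior / evidence


def best_action(p_icy: float, utilities: dict[str, dict[str, float]]) -> str:
    """Pick the action with the highest expected utility under belief p_icy."""

    def expected_utility(action: str) -> float:
        u = utilities[action]
        return p_icy * u["icy"] + (1.0 - p_icy) * u["clear"]

    return max(utilities, key=expected_utility)


# Hypothetical utilities: slowing down costs a little time on a clear road,
# while keeping speed on an icy road is very costly.
UTILITIES = {
    "slow_down": {"icy": 0.0, "clear": -1.0},
    "keep_speed": {"icy": -10.0, "clear": 0.0},
}
```

With a prior of 0.05 that the road is icy, `best_action(0.05, UTILITIES)` returns `"keep_speed"`. After a noisy sensor reports ice (assumed to fire 90% of the time on ice and 20% of the time on a clear road), `posterior(0.05, 0.9, 0.2)` raises the belief to roughly 0.19 and the best action becomes `"slow_down"`. The sensor is far from reliable, yet the evidence is enough to change the decision because the cost of being wrong is asymmetric.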

Real-World Examples of Rational Agents in Action

- Autonomous Vehicles: Self-driving systems use sensors and models to decide how to navigate roads safely and efficiently, treating passenger safety as the utility to maximize.

- AI-Powered Trading Systems: Trading agents evaluate market data, risk thresholds, and investment goals to execute trades aligned with desired outcomes.

- Customer Service Bots: Beyond simple chat responses, advanced bots assess context, escalate issues, and act within CRM systems to resolve queries efficiently.

- Healthcare Decision Agents: Rational agents in healthcare analyze patient data to recommend treatments while balancing risks and benefits.

- Energy Management Systems: Smart grids deploy rational agents to optimize power distribution based on fluctuating supply and demand.

Challenges and Ethical Considerations

While rational agents offer immense potential, their autonomy raises important questions:

- Goal Alignment: Ensuring that agents’ goals align with human or organizational values.

- Transparency: Understanding why a rational agent made a decision is crucial for trust and accountability.

- Data Dependence: Poor or biased data can lead to irrational outcomes despite the agent’s logical framework.

- Ethical Boundaries: Rationality must be balanced with fairness, safety, and human oversight.

The Future of Rational Agents in Agentic AI

Rational agents are not just academic concepts; they are becoming building blocks of the agentic AI movement.

In the near future:

- Rational agents will form networks that negotiate, cooperate, and self-organize to achieve complex objectives.

- Hybrid systems will merge rational reasoning with generative capabilities, enabling agents that can both create and decide.

- Enterprises will increasingly deploy rational agents to automate strategic workflows, from forecasting to risk management.

Ultimately, the rational agent model provides the logic, structure, and intentionality that make AI systems not only intelligent but purposeful.

Key Takeaway

A rational agent in AI is more than just a programmed system; it’s the foundation for AI that can perceive, reason, learn, and act autonomously.

As organizations move from generative AI to agentic AI, understanding rational agents is essential to designing intelligent systems that think and decide, not just compute and respond.

FAQs

What does “rational” mean in AI?

In AI, rational doesn’t mean “perfect”; it means acting in a way that maximizes expected performance based on available information. A rational agent chooses the best possible action given what it knows at the time.

How does a rational agent differ from a reactive agent?

A reactive agent responds to stimuli without considering long-term goals or outcomes, while a rational agent reasons about future consequences and selects actions that optimize results.

Are all AI systems rational agents?

Not necessarily. Some AI systems follow fixed rule sets or heuristics that don’t always optimize performance. Rational agents, by contrast, use reasoning or learning to continuously align actions with objectives.

How do rational agents relate to agentic AI?

Rational agents are the building blocks of agentic AI. They provide the foundation for autonomy, multi-step reasoning, and decision-making, enabling AI systems to operate as collaborators rather than just tools.

Can rational agents make ethical decisions?

Rational agents can be programmed with ethical or value-based constraints, but true ethical reasoning still depends on human oversight. They can weigh trade-offs logically but require human input to define what’s “right” or “acceptable.”

How do rational agents handle uncertainty?

They use probabilistic models, reinforcement learning, or utility-based reasoning to make the best possible decision under uncertainty, choosing the action with the highest expected utility even when information is limited.

Where are rational agents used?

Rational agents are used in autonomous vehicles, financial trading systems, supply chain management, smart grids, and digital assistants, wherever autonomous decision-making is required.
